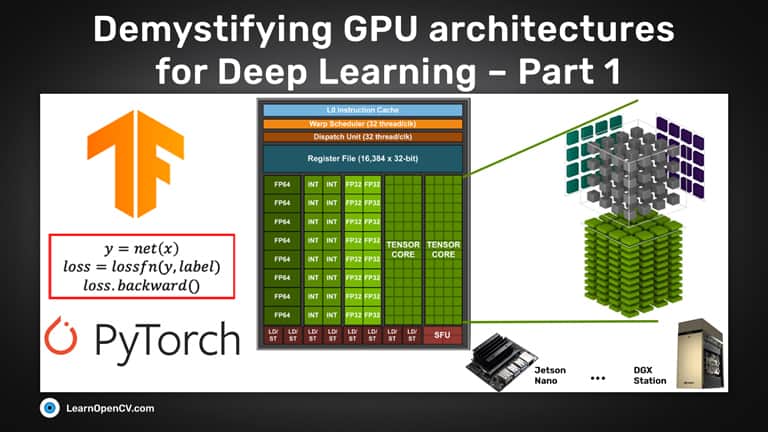

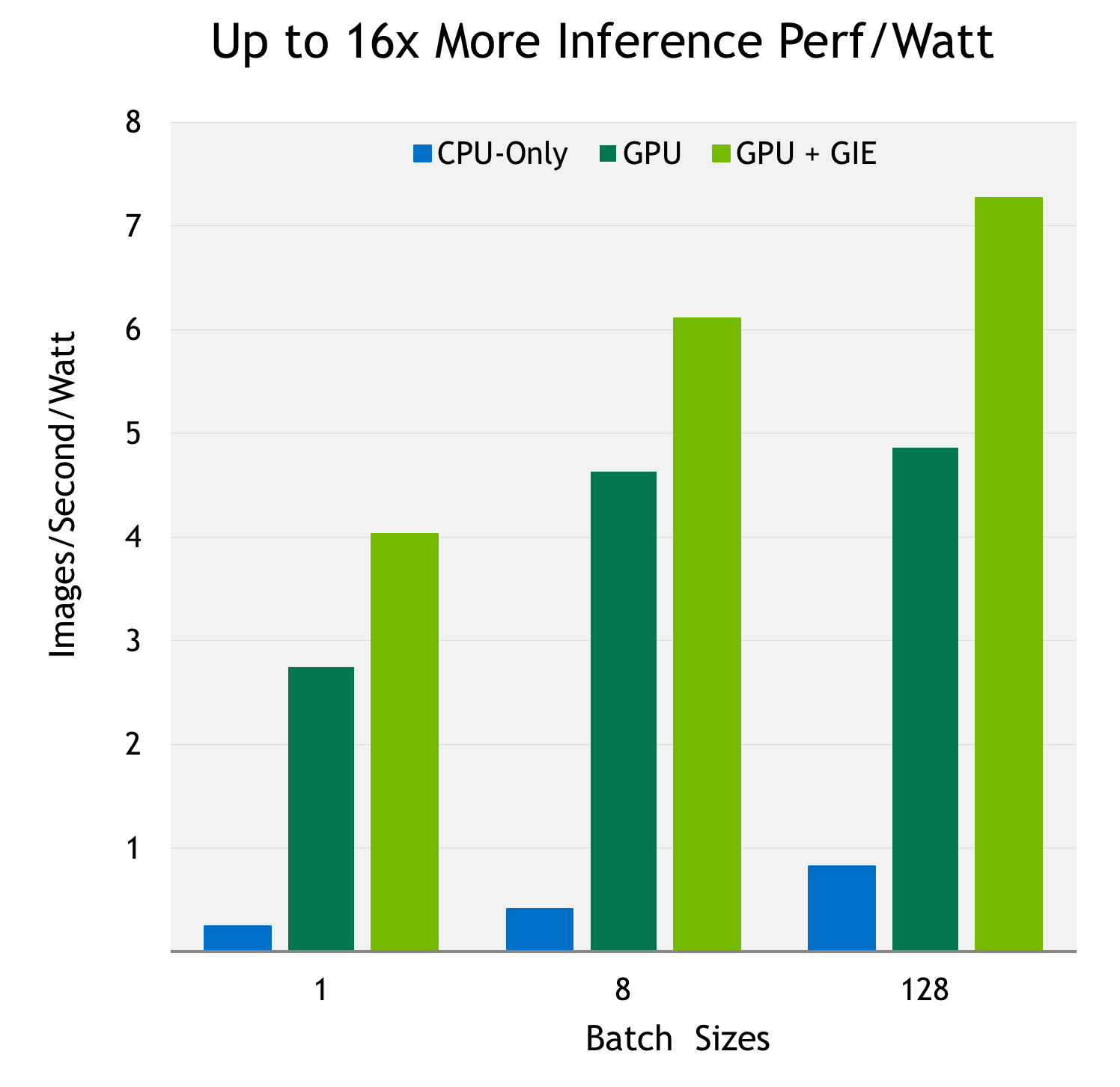

RTX 2060 Vs GTX 1080Ti Deep Learning Benchmarks: Cheapest RTX card Vs Most Expensive GTX card | by Eric Perbos-Brinck | Towards Data Science

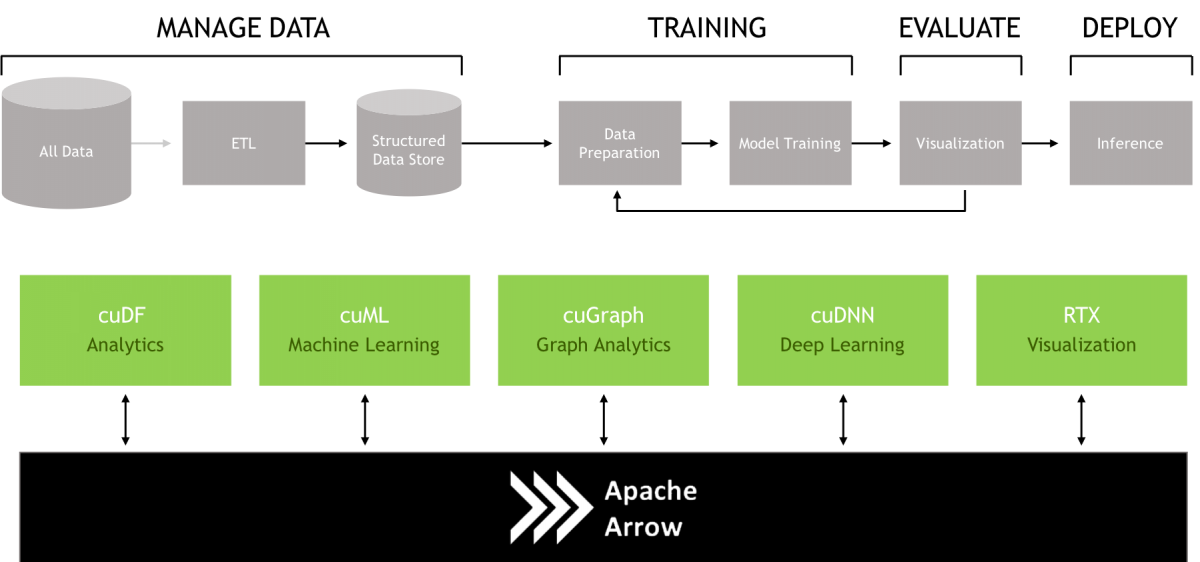

NVIDIA AI on Twitter: "Build GPU-accelerated #AI and #datascience applications with CUDA python. @nvidia Deep Learning Institute is offering hands-on workshops on the Fundamentals of Accelerated Computing. Register today: https://t.co ...